
Cody Greco

Co-Founder and CTO

"We retrain and redeploy thousands of models daily, so choosing the right partner for model deployment is critical. We initially investigated SageMaker, but Modelbit’s performance paired with its ease of use was a game-changer for us."

Nick Pilkington

Co-Founder and CTO

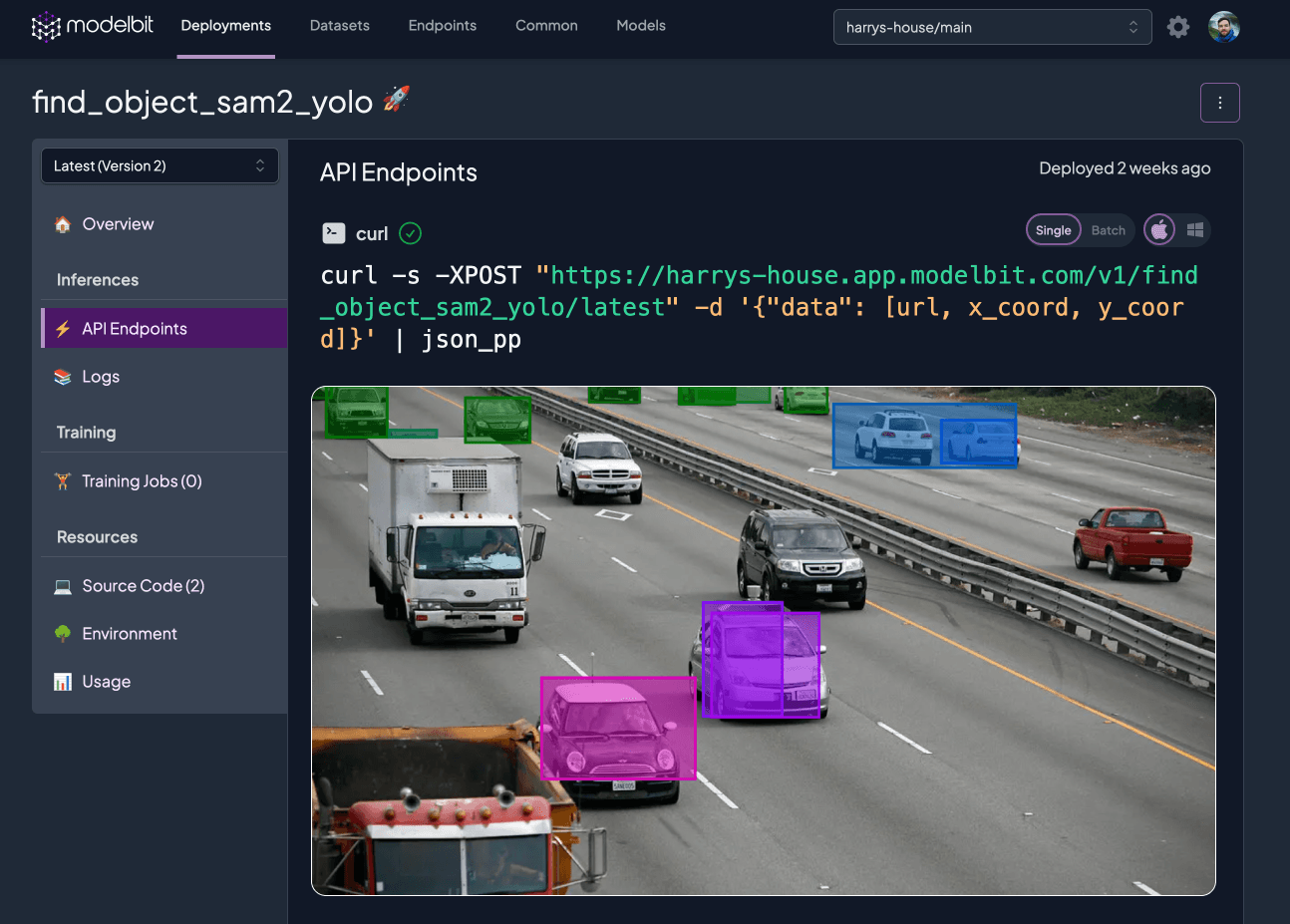

"Modelbit enabled us to easily deploy several large vision transformer-based models to environments with GPUs. Rapidly deploying and iterating on these models in Modelbit allowed us to build the next generation of our product in days instead of months."

Daniel Mewes

Staff Software Engineer

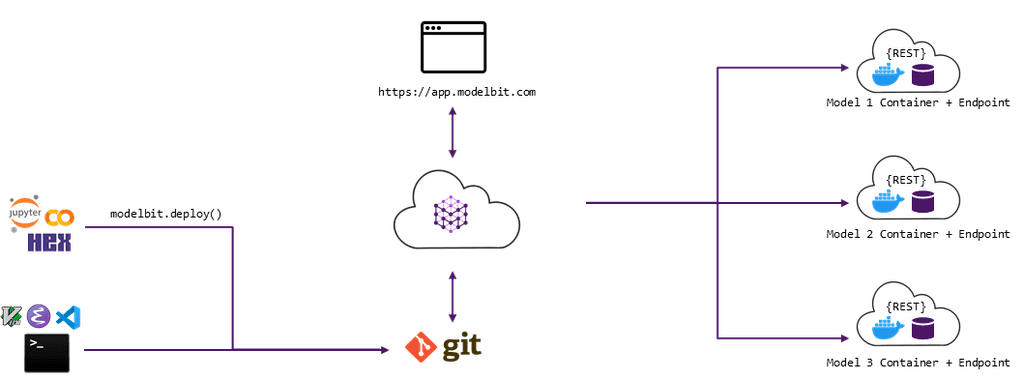

"With Modelbit, we’ve been able to experiment with new models faster than ever before. Once a model is up, we’re able to make iterations in a matter of minutes. It feels a bit like magic every time I make a change to our model code, push it to GitHub, and see it live on Modelbit just seconds later."