Introduction

OWL-ViT is a new object detection model from the team at Google Research. It allows you to identify an object in one image (the “query image”) and then find that same object in any number of target images. Building on Google’s foundational Transformers work, the model creates an embedding using the query image and the prompt (a bounding box). That embedding represents an object, and can be compared to objects found in any other image!

Google has released the OWL-ViT code as part of its scenic library. The self-documenting demo is a very slick interactive Colab notebook that lets you click-and-drag on one image, and then shows you the found object in subsequent images.

While the demo is very cool, it doesn’t necessarily help you deploy OWL-ViT into production! In a real production environment, you’ll want inference (object detection and getting embeddings from prompts) to run server-side, whereas the click-and-drag or any other interaction that generates the prompt will likely run client-side.

In this post, we’ve separated OWL-ViT’s model API from the interactive Colab demo to show how you can call those APIs directly, and how you can deploy the model to production. Once it’s in production, you can call the API with the URL of the query image, the bounding box of the object identified in the query image, and the URL of the target image. The REST API will return an array of bounding boxes, each with coordinates, one for every matching object found in the target image.

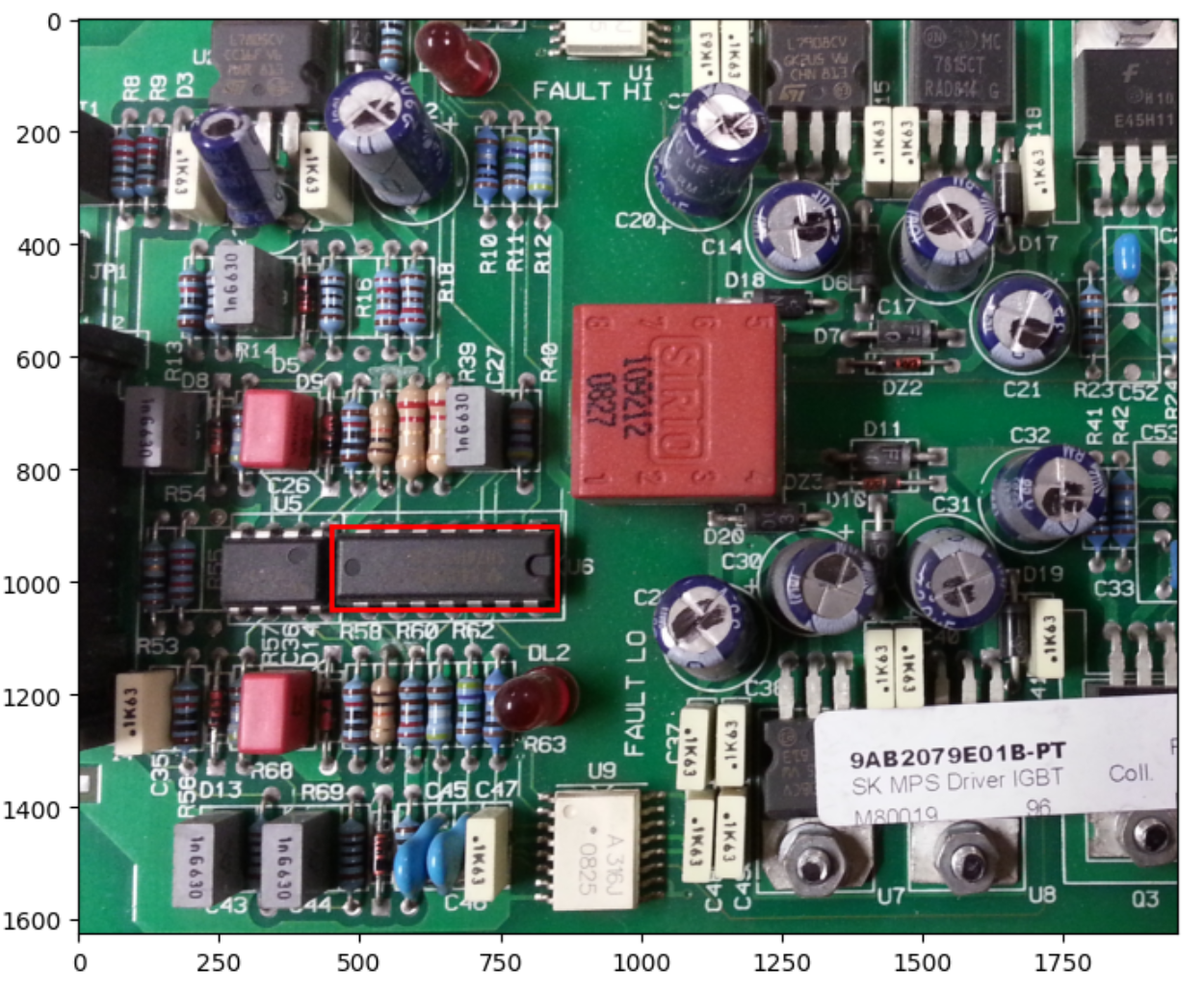

For our demo we’ll use Google’s supplied circuit board image and the bounding box of a microchip in the image:

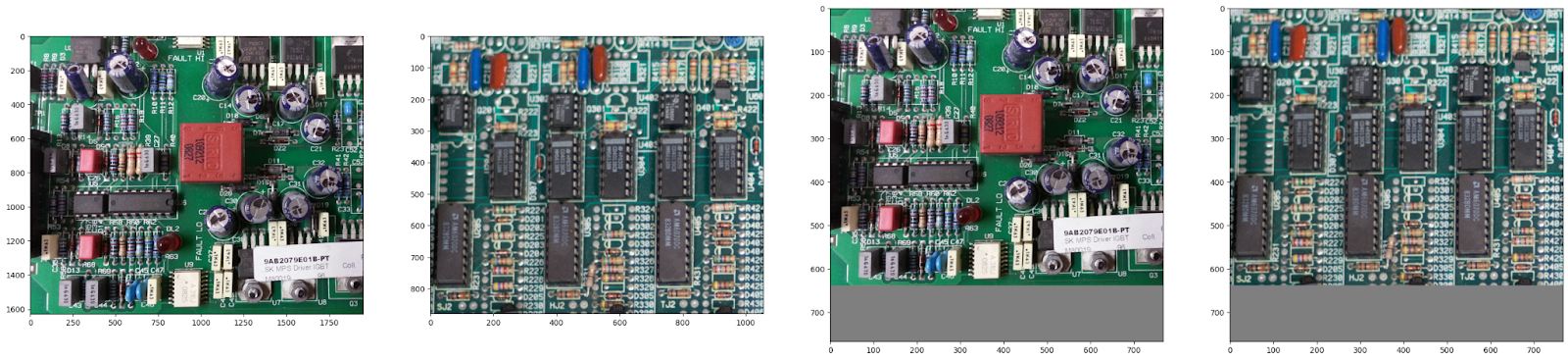

And we’ll use the API to find all the microchips in Google’s example processor board image:

Let’s get started!

Installation

We’ll get started by following the installation instructions in the OWL-ViT inference playground notebook.

The scenic package itself is distributed as a pip package from their GitHub repo. We can clear out the local files, clone the repo and install the scenic package:

Scenic comes bundled with many projects, of which OWL-ViT is just one. Each project comes with its own additional environment requirements. Let’s install OWL-ViT’s:

Additionally, OWL-ViT depends on Google Research’s big_vision library. This library is distributed as raw source code, so let’s check that out and put it in the Python path:
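One way to handle the Python path step from inside the notebook is sketched below. The directory name "big_vision" is an assumption about where the repository was checked out, so adjust it to the actual location.

```python
import sys
from pathlib import Path

# Assumption: big_vision was cloned into ./big_vision relative to the
# current working directory. Change this to wherever the checkout lives.
BIG_VISION_DIR = Path("big_vision").resolve()

# Add the checkout to the import path once, so that imports resolve to the
# raw source directory.
if str(BIG_VISION_DIR) not in sys.path:
    sys.path.append(str(BIG_VISION_DIR))
```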

It’s worth noting that subsequent versions of bokeh have broken OWL-ViT, so let’s make sure we have a working version:

We’ll use Modelbit to deploy the model, so let’s install that too:

Finally, let’s proceed with the imports that we’re going to need to make this model a reality:

Building The OWL-ViT Model

We’ll use OWL-ViT out of the box for this project. So to get started, let’s just pull down the default model configuration and checkpoints and preload the model:

Working With The Model

To start, it’s important to understand how OWL-ViT works with images internally, and the format it expects for its bounding boxes. Here are our query and target images before and after OWL-ViT processes them:

On the left are the query and target images as we supplied them. They’re different sizes, as we would expect from real-world images. The query image is about 1600x1800 and the target image is about 900x1100. (OWL-ViT’s box format and the image arrays that matplotlib displays both put the row first, i.e. (y, x), so we will stick with that order for this post.)

OWL-ViT insists on 800x800 square images. It will resize the images down to that size while preserving the aspect ratio, and fill the resulting empty space with gray pixels.
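To make the resize-and-pad arithmetic concrete, here is a small sketch that computes how an image lands on the square canvas. The function "letterbox_geometry" is a hypothetical helper written for this illustration, not part of OWL-ViT, and the rounding is an assumption; the exact pixels always come from the model’s own preprocessing.

```python
def letterbox_geometry(height, width, target=800):
    """Describe how an image fits on a target x target square canvas.

    Returns (content_height, content_width, pad_y, pad_x): the size of the
    resized image and the total gray padding, in pixels, along each axis.
    """
    # One scale factor for both axes preserves the aspect ratio; the longer
    # side ends up exactly `target` pixels long.
    scale = target / max(height, width)
    content_height = round(height * scale)
    content_width = round(width * scale)
    return (content_height, content_width,
            target - content_height, target - content_width)


# A tall 1600x800 (height x width) image: the width is padded.
print(letterbox_geometry(1600, 800))  # (800, 400, 0, 400)
# A wide 400x1600 image: the height is padded.
print(letterbox_geometry(400, 1600))  # (200, 800, 600, 0)
```

Only one axis is ever padded, because the longer side always fills the canvas.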

It then expects the prompt box in the format (y0, x0, y1, x1) where all four numbers are between 0.0 and 1.0. The top left coordinate is (0.0, 0.0) and the bottom right of the post-processed image including the gray pixels is (1.0, 1.0).

Fortunately, we can get the exact processed image from the model by calling "model.preprocess_image(original_image)". Once we have the dimensions for both the pre- and post-processed query images, we’ll need to translate the bounding box on the axis that is being padded, like so:

Then we’ll need to scale it to match the new 800x800 dimensions:

Finally we’ll need to scale it down to a number between 0.0 and 1.0:

Here’s the full code for scaling your bounding box from coordinates on your original query image to coordinates that the OWL-ViT model will work with:
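As a minimal sketch of that conversion, the function below scales a pixel box into processed-image pixels, shifts it past any leading padding, and normalizes it. The name "normalize_prompt_box" and the "pad_before" parameter are hypothetical. The default of no leading padding assumes the gray pixels are added only below or to the right of the image, so check that against the output of "model.preprocess_image" before relying on it.

```python
def normalize_prompt_box(box, image_height, image_width,
                         target=800, pad_before=(0.0, 0.0)):
    """Map a (y0, x0, y1, x1) pixel box on the original image to 0.0-1.0
    coordinates on the padded target x target image.

    pad_before is the gray padding, in processed pixels, that sits above and
    to the left of the image content. (0, 0) assumes the padding is added
    only below / to the right; measure it on the processed image if not.
    """
    y0, x0, y1, x1 = box
    pad_y, pad_x = pad_before
    # Original pixels -> processed pixels (same factor on both axes).
    scale = target / max(image_height, image_width)

    def convert(value, pad):
        # Scale, shift past any leading padding, then normalize to 0.0-1.0.
        return (value * scale + pad) / target

    return (convert(y0, pad_y), convert(x0, pad_x),
            convert(y1, pad_y), convert(x1, pad_x))


box = normalize_prompt_box((400, 200, 800, 600), image_height=1600, image_width=800)
print(box)  # (0.25, 0.125, 0.5, 0.375)
```

Applying the shift in processed pixels rather than original pixels gives the same result as translating first and scaling second; it just keeps the padding in the units you can measure on the processed image.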

Readers with a keen eye will notice that we use a helper function to make it easy to draw bounding boxes on matplotlib images. Here’s that code:
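A helper along these lines might look like the sketch below. The names "box_outline" and "draw_box" are hypothetical, and the box is assumed to be in the pixel coordinates of whatever image is shown on the axes.

```python
def box_outline(box):
    """Return (xs, ys) tracing a closed rectangle for a (y0, x0, y1, x1) box."""
    y0, x0, y1, x1 = box
    # Visit the four corners and return to the start so the outline closes.
    xs = [x0, x1, x1, x0, x0]
    ys = [y0, y0, y1, y1, y0]
    return xs, ys


def draw_box(ax, box, color="red"):
    """Draw a (y0, x0, y1, x1) box on a matplotlib Axes in data coordinates."""
    xs, ys = box_outline(box)
    # Axes.plot takes x values first, so the (y, x) order is swapped here.
    return ax.plot(xs, ys, color=color)
```

To use it, create the axes with "plt.subplots()", show the image with "ax.imshow(image)", and then call "draw_box(ax, box)" once per box.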

Getting Inferences

We want to supply image URLs to our API at request time, so that means our Python code will need to pull down the images from those URLs, like so:
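A minimal sketch of the download step using only the standard library follows. The function name "download_image_bytes" and the ten-second timeout are assumptions made for this illustration.

```python
import urllib.request


def download_image_bytes(url, timeout=10.0):
    """Fetch the raw bytes of an image from a URL.

    Raises urllib.error.URLError (or its subclass HTTPError) if the request
    fails, and ValueError if the response body is empty.
    """
    with urllib.request.urlopen(url, timeout=timeout) as response:
        data = response.read()
    if not data:
        raise ValueError(f"no data returned from {url}")
    return data
```

The returned bytes still need to be decoded into a pixel array before the model can use them, for example by opening them with Pillow through "io.BytesIO" and converting the result with "numpy.asarray".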

Once we have a prompt_box from our prompt_box_for_query_image function above, we’ll use three calls to get the bounding boxes on the target image. Here they are:

Let’s take these in order. First, the call to "model.embed_image" returns bounding boxes for every single object found using OWL-ViT’s zero-shot detection on the target image. On this image, that’s over 2,000 objects and therefore 2,000 bounding boxes.

Next, the call to "model.embed_image_query" gets an embedding for the object found using the bounding box on the query image. This is super cool: We now have a representation of the microchip object from the query image that is portable across images! Once a microchip is found, we can look for a microchip in any supplied image.

Finally, by passing that embedding to "model.get_scores", we get back a score for every object in the target image. So of those 2,000+ bounding boxes, each one gets a score from 0 to 1 of how similar it is to the object represented by the embedding, i.e., the microchip.

Reformatting The Output Bounding Boxes

Unhelpfully, OWL-ViT returns its bounding boxes in an internal (cx, cy, w, h) format. Fortunately, the library supplies a helper method to convert that to the same (y0, x0, y1, x1) format, with values between 0.0 and 1.0, that we used for the prompt box on the query image. Here’s that code:
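For reference, the arithmetic behind such a conversion is short enough to write out by hand. The function below is an illustrative stand-in named "cxcywh_to_yxyx", not the library’s own helper, and it handles a single box rather than a batch.

```python
def cxcywh_to_yxyx(box):
    """Convert a (cx, cy, w, h) box to (y0, x0, y1, x1) corner format."""
    cx, cy, w, h = box
    # The center sits half a width / half a height from each edge.
    return (cy - h / 2, cx - w / 2, cy + h / 2, cx + w / 2)


print(cxcywh_to_yxyx((0.5, 0.5, 0.25, 0.5)))  # (0.25, 0.375, 0.75, 0.625)
```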

But remember, these coordinates will be on the processed square image with the gray padding. We’ll need to scale those to real dimensions on the originally-supplied, non-processed target image in order to be useful to the callers of our API.

Fortunately, this is just a matter of discovering which axis has the larger multiplier to get to the original dimensions, and then multiplying both axes by the larger multiplier! This will take care of the translation and the scaling in one step:
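A minimal sketch of that step is shown below. The function name "box_to_original_pixels" is hypothetical, and the code assumes the gray padding sits only below or to the right of the image content, which is what lets a single multiplier do all the work.

```python
def box_to_original_pixels(box, image_height, image_width, processed_size=800):
    """Map a normalized (y0, x0, y1, x1) box on the processed square image
    back to pixel coordinates on the original image.

    Assumes the gray padding sits only below / to the right of the image
    content, so no offset has to be subtracted.
    """
    # Multipliers from processed pixels to original pixels on each axis; the
    # padded axis has the smaller one, so the larger one is the true scale.
    multiplier = max(image_height / processed_size, image_width / processed_size)
    # Normalized -> processed pixels -> original pixels.
    return tuple(value * processed_size * multiplier for value in box)


print(box_to_original_pixels((0.25, 0.5, 0.5, 0.75), image_height=900, image_width=1100))
# (275.0, 550.0, 550.0, 825.0)
```

For the 900x1100 example, the width is the longer side, so both axes are multiplied by 1100 / 800.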

To put it all together, we’ll insert the above scaling code into a loop that looks at every bounding box on the target image, picks out the boxes with high scores, and scales those boxes to real coordinates.
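The selection part of that loop can be sketched as follows. The function name "select_boxes" and the 0.9 threshold are assumptions; a sensible cutoff depends on the images and should be tuned by inspecting the results.

```python
def select_boxes(boxes, scores, threshold=0.9):
    """Return (score, box) pairs whose score meets the threshold, best first."""
    if len(boxes) != len(scores):
        raise ValueError("boxes and scores must have the same length")
    kept = [(score, box) for score, box in zip(scores, boxes) if score >= threshold]
    # Sort on the score only, so boxes never need to be comparable.
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return kept


boxes = [(0.1, 0.1, 0.2, 0.2), (0.4, 0.4, 0.6, 0.6), (0.7, 0.1, 0.9, 0.3)]
scores = [0.95, 0.2, 0.99]
print(select_boxes(boxes, scores))
# [(0.99, (0.7, 0.1, 0.9, 0.3)), (0.95, (0.1, 0.1, 0.2, 0.2))]
```

Each surviving box would then be scaled back to the original image’s pixel coordinates before being returned to the caller.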

Putting It All Together

We’ve seen how to download the images from the supplied URLs, how to scale the input bounding box to a format the model expects, how to get inferences from the model, and how to scale those inferences back out to a useful format.

Here’s all the code put together in one inference function:

The output of this function is pretty damn cool:

Deploying The Model To REST

Now that we’ve got the OWL-ViT code working in a deployable way, let’s deploy it to a production cloud environment! Fortunately, Modelbit makes this easy. First let’s log in to Modelbit from our notebook:

And now let’s deploy it!

We simply pass the inference function, "owl_vit_for_object_detection", to "mb.deploy"!

We’ve made several optional choices in calling "mb.deploy" that are worth explaining here. First, we specify the git URL of the scenic package so that Modelbit will install it in production the same way we installed it in the notebook. Modelbit will also automatically install all of scenic’s specified dependencies, which is convenient.

Next, you’ll remember that we installed additional OWL-ViT dependencies and "big_vision" dependencies. We went ahead and manually specified the ones we’re using. Finally, since "big_vision" was installed as a raw source directory, we instruct Modelbit to install it the same way.

Now that we’ve deployed the model, Modelbit will give us REST as well as SQL APIs directly to the model!

We can call it from REST giving it the URLs of our query image and target image, and the bounding box for the object in our query image. The model will return the bounding boxes for the same object in the target image!
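As a rough sketch of what such a call could look like from Python, the code below builds the request without sending it. Everything specific here is an assumption: the endpoint URL is a placeholder, and both the {"data": [...]} payload shape and the argument order should be checked against the API page Modelbit shows for the deployment.

```python
import json
import urllib.request


def build_detection_request(endpoint_url, query_image_url, query_box, target_image_url):
    """Build (but do not send) a JSON POST request for the deployed model.

    Assumption: the endpoint takes {"data": [...]} with the arguments in this
    order. Confirm the real shape on the deployment's API page.
    """
    payload = {"data": [query_image_url, list(query_box), target_image_url]}
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_detection_request(
    "https://example.invalid/v1/owl_vit_for_object_detection/latest",  # placeholder URL
    "https://example.invalid/query.png",   # placeholder query image
    (400, 200, 800, 600),                  # example (y0, x0, y1, x1) box
    "https://example.invalid/target.png",  # placeholder target image
)
```

Sending the request with "urllib.request.urlopen(request)" and parsing the response body with "json.loads" would then yield the bounding boxes found in the target image.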

Taking a look at the Modelbit logs, we can eyeball the images themselves to confirm that the model is succeeding:

We also see that it took only about two seconds to get inferences in production. Not bad! We can now use our REST API to pick out objects in one image and identify matching objects in any other image, all in just a couple of seconds.
